Prompt Engineering Guide for 2024

Can't-miss best practices for prompt engineering in 2024, aggregated from the guides published by OpenAI, Google, Meta, and Anthropic.

You need a good prompt.

Generative AI is becoming part of everyday life, so we need to get good at crafting effective prompts. A well-designed prompt can make the difference between a helpful response and a confusing one.

Andrew Ng, a renowned AI expert, suggests that we should treat large language models (LLMs) like fresh graduates. Paraphrasing him, we need to tell them clearly what they need to do and even break down how to do it. This means providing clear guidelines and detailed instructions. According to Google, a good prompt averages around 21 words, though this likely excludes additional examples needed for context.

As Ng puts it: "Many people are used to dashing off a quick, 1- to 2-sentence query to an LLM. In contrast, when building applications, I see sophisticated teams frequently writing prompts that might be 1 to 2 pages long (my teams call them “mega-prompts”) that provide complex instructions to specify in detail how we’d like an LLM to perform a task." (source: DeepLearning.AI)

In this blog, we will explore the best practices for creating effective prompts. Whether you're using tools like ChatGPT or Gemini or building custom LLM-based applications, these techniques will help you get the most out of your AI interactions.

The Right Mindset for Prompt Engineering

Creating effective prompts is not about getting it perfect on the first try. Instead, it’s an iterative process of refining and improving. Here are some key mindsets to adopt:

Embrace Iteration

Don’t aim for perfection in one shot. Start with a short, quick prompt and refine it until you are satisfied with the results. Think of it like sculpting—each iteration brings you closer to the final masterpiece. Test edge cases and update prompts to fix bugs.

The Playground Mindset

Approach prompt engineering with a playful mindset. Start quickly and broadly, then narrow down your focus as you identify what works and what doesn’t. This flexibility allows you to discover the most effective way to communicate with the LLM.

Understand the LLM’s Limitations

Remember, LLMs are not omniscient—they can’t read your mind. They rely on the instructions you provide. The clearer and more specific your instructions, the better the output.

Start with You

You know your needs and goals best. Begin with your understanding and clearly articulate it to the LLM. By providing context and details, you help the AI understand and meet your expectations.

Level Up Your Prompting Techniques

Level 1—Main Components

Your journey starts here.

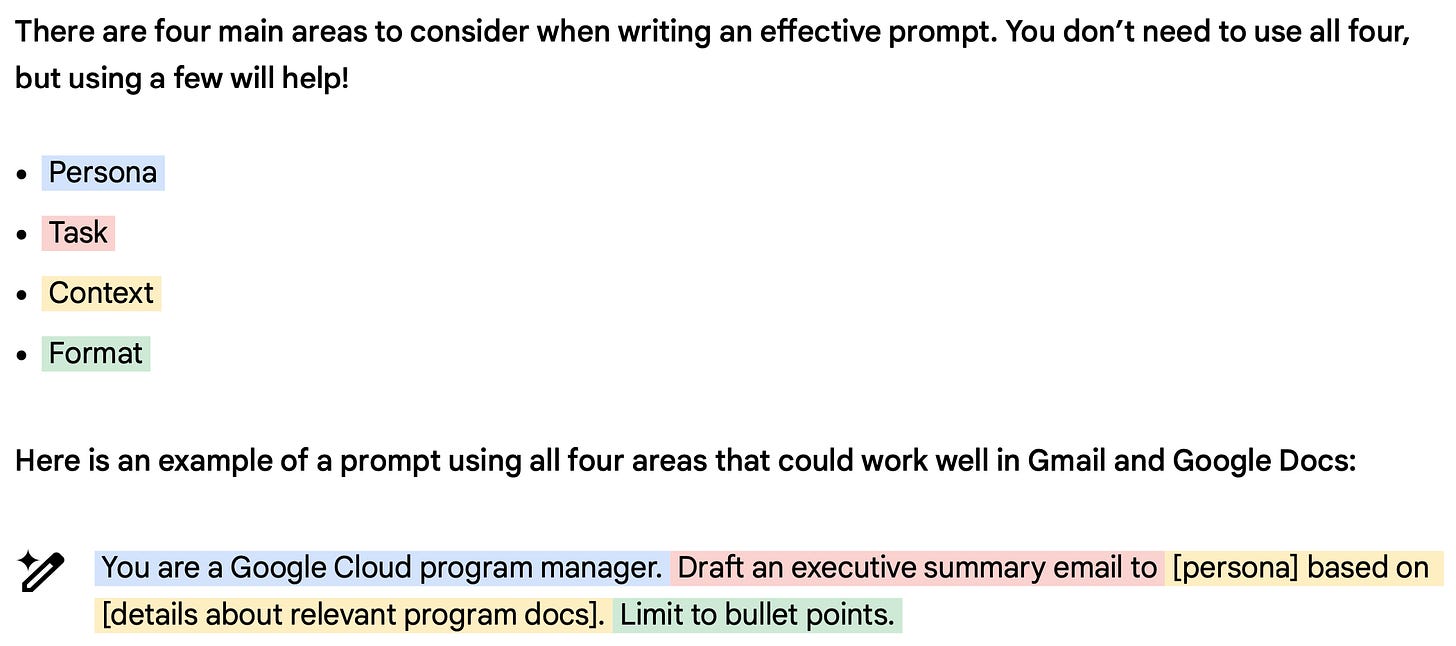

When crafting a prompt, it's essential to include three main components: the task, the context, and the output format.

Task

The task is what you want the LLM to do. For example, "write a cover letter." Be specific about the action you want.

Context

Provide relevant background information. For instance, "I am applying for a senior AI engineer position at OpenAI. Here is the job description: [include job description]."

Output Format

Specify how you want the response to be formatted. For example, "a one-page cover letter in a formal, conventional style" or "extract names and entities and answer with XML tags."

By combining these three components, you create a prompt that gives the LLM enough guidance to produce the desired outcome.
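If you build prompts in code (for example, inside an LLM-based app), the three components map naturally onto three fields. Here is a minimal Python sketch, assuming you simply want each component as its own paragraph; `build_prompt` is a hypothetical helper, not part of any library.

```python
def build_prompt(task: str, context: str, output_format: str) -> str:
    """Combine task, context, and output format into one prompt, one per paragraph."""
    parts = [task.strip(), context.strip(), output_format.strip()]
    # Drop empty components so the prompt never contains blank sections.
    return "\n\n".join(part for part in parts if part)


prompt = build_prompt(
    task="Write a cover letter.",
    context=(
        "I am applying for a senior AI engineer position at OpenAI. "
        "Here is the job description: [include job description]"
    ),
    output_format="Make it a one-page cover letter in a formal, conventional style.",
)
print(prompt)
```

Keeping the components separate makes it easy to iterate on one of them (say, the output format) without touching the others.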

Level 2—AI character or Role-playing

One powerful technique for improving the performance of LLMs is to assign them a specific role. This technique, known as role-playing, can help the LLM generate more accurate and contextually appropriate responses.

Benefits of Role-Playing. Assigning a role to the LLM provides it with a clearer perspective and context, which can lead to more relevant and tailored outputs. When the LLM "assumes" a role, it draws on relevant information and style associated with that role.

Be Specific with Roles. Instead of giving the LLM a generic role like "helpful assistant," be specific. For instance, "Act as a senior HR consultant working in a world-class headhunter company." This specificity helps the LLM generate more precise and useful responses.

Here is an example.

Generic: "Act as a helpful AI assistant and write a cover letter for me."

Specific: "Act as a senior HR consultant working in a top-tier recruitment firm and write a cover letter for me."
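In chat-style APIs, the role is commonly placed in a system message ahead of the user's request. The sketch below only builds the message list and makes no API call. The `role`/`content` dictionary shape mirrors the format used by chat APIs such as OpenAI's, but field names and where the system prompt goes vary by provider, so check your provider's documentation. `build_messages` is a hypothetical helper.

```python
def build_messages(role: str, user_request: str) -> list[dict[str, str]]:
    """Put the role description in a system message and the request in a user message."""
    return [
        {"role": "system", "content": role},
        {"role": "user", "content": user_request},
    ]


generic = build_messages(
    "You are a helpful AI assistant.",
    "Write a cover letter for me.",
)
specific = build_messages(
    "You are a senior HR consultant working in a top-tier recruitment firm.",
    "Write a cover letter for me.",
)
for message in specific:
    print(f"{message['role']}: {message['content']}")
```

Only the system message differs between the two versions, which makes it easy to compare how much the specific role changes the output.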

Level 3—Formatting the Prompt

Structuring your prompts in a clear and organized manner can significantly enhance the LLM's understanding and performance. Here are some key techniques for formatting your prompts:

Organize into sections

Grouping parts of your prompt into distinct sections helps the LLM understand each part of the task. Common sections include the role, task, context, necessary inputs, and examples (see the example below).

Use of Tags

Using tags like XML or Markdown can further clarify the structure.
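Tags also make it easy to assemble a prompt in code and to pull tagged answers back out of the reply when you ask the model to answer in tags. Here is a minimal Python sketch using only the standard library. `wrap` and `extract` are hypothetical helpers, and the regex approach assumes tags are not nested; use a real XML parser if they are. The tag name uses an underscore because XML tag names cannot contain spaces.

```python
import re


def wrap(tag: str, text: str) -> str:
    """Wrap text in an XML-style tag, e.g. <job_description>...</job_description>."""
    return f"<{tag}>\n{text.strip()}\n</{tag}>"


def extract(tag: str, response: str) -> list[str]:
    """Return the contents of every <tag>...</tag> pair found in a model's response."""
    pattern = rf"<{re.escape(tag)}>(.*?)</{re.escape(tag)}>"
    return [match.strip() for match in re.findall(pattern, response, flags=re.DOTALL)]


prompt = "\n\n".join([
    "Act as a senior HR consultant.",
    "Write a cover letter for a senior AI engineer position.",
    wrap("job_description", "[include job description here as text]"),
])

# A made-up response, used only to show the parsing step.
response = "<sender>John Smith</sender>\n<deadline>August 15th</deadline>"
print(extract("sender", response))    # ['John Smith']
print(extract("deadline", response))  # ['August 15th']
```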

Act as a senior HR consultant. # Role part

I am applying for a role at OpenAI. # Context or situation part.

Write a cover letter for a senior AI engineer position. # Task part

The job description is the following: # Context part

<job_description> # Use of XML tag

[include job description here as text]

</job_description>

Your output will be a one-page cover letter in a formal, conventional style. # Output specification part

Another example, taken directly from Anthropic’s guide, asks for the output to be formatted with XML tags:

Please extract the key details from the following email and return them in XML tags:

- Sender name in <sender></sender> tags

- Main topic in <topic></topic> tags

- Any deadlines or dates mentioned in <deadline></deadline> tags

<email>

From: John Smith

To: Jane Doe

Subject: Project X Update

Hi Jane,

I wanted to give you a quick update on Project X. We’ve made good progress this week and are on track to meet the initial milestones. However, we may need some additional resources to complete the final phase by the August 15th deadline.

Can we schedule a meeting next week to discuss the budget and timeline in more detail?

Thanks,

John

</email>

Templates

Templates are another effective way to guide the LLM. They also help remove extraneous words (such as “Sure! Here is the cover letter.”) from the LLM’s response.
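In an application, a template is usually a fixed string with a placeholder for each new input. Here is a minimal sketch using Python's standard `string.Template`; the variable names are illustrative. The open `sentiment:` slot at the end is what nudges the model to reply with just the label.

```python
from string import Template

# Fixed wording plus one worked example; $review is the only part that changes.
SENTIMENT_TEMPLATE = Template(
    "Acting as a senior quality assurance officer, your task is to determine "
    "whether a given user review is good, neutral, or bad.\n\n"
    "review: this movie is worth the money!\n"
    "sentiment: good\n\n"
    "review: $review\n"
    "sentiment:"
)

# substitute() raises KeyError if a placeholder is left unfilled.
prompt = SENTIMENT_TEMPLATE.substitute(review="i slept throughout. :'(")
print(prompt)
```

The plain-text version of the same template follows.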

Acting as a senior quality assurance officer, your task is to determine whether a given user review is good, neutral, or bad.

review: this movie is worth the money!

sentiment: good

review: i slept throughout. :'(

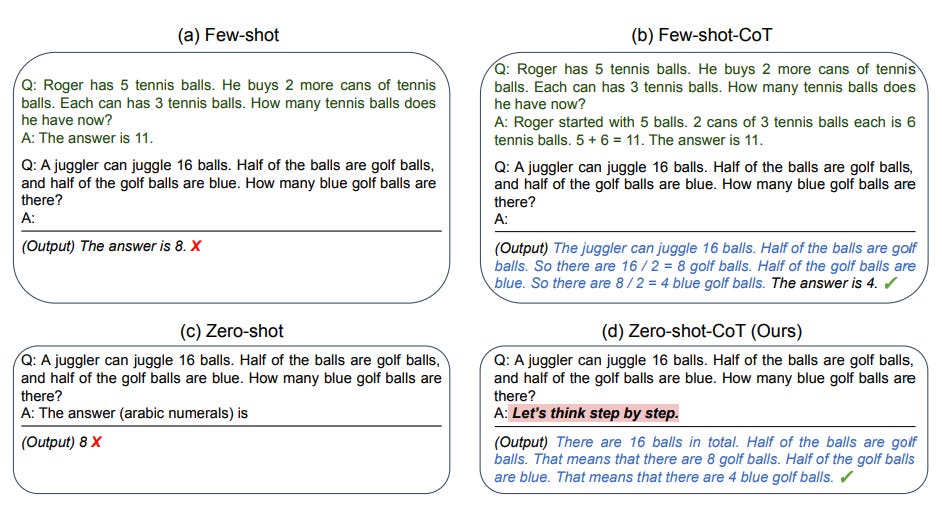

sentiment: Level 4—Providing Examples (Few-shot or N-shot Learning)

Few-shot learning, or N-shot learning, means giving the LLM a number of worked examples in the prompt so that it can infer how to perform the task from them. This technique is useful because it (1) gives the LLM examples to learn from on the job and (2) sets clearer expectations about the desired output.

Building on the sentiment template from Level 3, the example below asks for a more complicated output style: a percentage breakdown instead of a single label. An output format like this is easier to show than to describe, which makes it a good fit for N-shot learning.
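In code, N is simply the number of (input, output) pairs you pass in. Here is a minimal Python sketch that mirrors the sentiment prompt used in this section; `few_shot_prompt` is a hypothetical helper.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Build an N-shot prompt: instruction, N worked examples, then the real input."""
    blocks = [instruction, "Here are some samples:"]
    for review, sentiment in examples:
        blocks.append(f"review: {review}\nsentiment: {sentiment}")
    # The real input goes last, with its answer left blank for the model.
    blocks.append(f"review: {query}\nsentiment:")
    return "\n\n".join(blocks)


EXAMPLES = [
    ("this movie is worth the money!", "100% positive 0% neutral 0% negative"),
    ("not too bad", "0% positive 70% neutral 30% negative"),
    ("meh", "0% positive 30% neutral 70% negative"),
]

prompt = few_shot_prompt(
    "Acting as a senior quality assurance officer, your task is to determine "
    "whether a given user review is good, neutral, or bad.",
    EXAMPLES,
    "I loved it!",
)
print(prompt)
```

Storing the examples as data makes it easy to add, remove, or swap them while you iterate.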

Acting as a senior quality assurance officer, your task is to determine whether a given user review is good, neutral, or bad.

Here are some samples:

review: this movie is worth the money!

sentiment: 100% positive 0% neutral 0% negative

review: not too bad

sentiment: 0% positive 70% neutral 30% negative

review: meh

sentiment: 0% positive 30% neutral 70% negative

review: I loved it!

sentiment:

Level 5—Chain of Thought Prompting

Chain-of-thought prompting is a technique where you (1) show the LLM examples of problems solved step by step and/or (2) ask the LLM to reason through the problem in steps itself. Working through intermediate steps helps the LLM apply logical reasoning and produce more accurate and coherent responses, especially on multi-step problems such as arithmetic.

A well-known phrase for triggering chain-of-thought is "Let's think step by step," which is usually appended to the end of the prompt.
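Here is a minimal Python sketch of zero-shot chain-of-thought, assuming the widely cited trigger phrase "Let's think step by step." It appends the trigger to the problem. For reference, it also computes the arithmetic a correct chain of reasoning should reach for the math problem used in this section. `zero_shot_cot` is a hypothetical helper.

```python
COT_TRIGGER = "Let's think step by step."


def zero_shot_cot(problem: str) -> str:
    """Ask for step-by-step reasoning without giving any worked examples."""
    return f"Solve the following math problem.\n\nProblem: {problem}\n\n{COT_TRIGGER}"


prompt = zero_shot_cot("What is 24 divided by 6 plus 3 times 2?")
print(prompt)

# The intermediate steps a correct answer should contain:
quotient = 24 / 6          # 24 / 6 = 4.0
product = 3 * 2            # 3 * 2 = 6
print(quotient + product)  # 10.0 (addition comes last)
```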

Zero-shot Chain of Thought

In this case, you provide no examples and simply ask the LLM to work through the task step by step on its own.

Solve the following math problem step-by-step.

Problem: What is 24 divided by 6 plus 3 times 2?

Answer:

Few-shot Chain of Thought

In this technique, you provide examples of how similar tasks are solved in steps. The LLM then tries to apply the same step-by-step approach to the real task.
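Here is a minimal Python sketch of few-shot chain-of-thought. The demonstration answer spells out every intermediate step, and the real problem's answer is left open for the model to complete in the same style. The demonstration problem and the `few_shot_cot` helper are hypothetical, chosen only for illustration.

```python
# Worked examples whose answers spell out every intermediate step.
DEMONSTRATIONS: tuple[tuple[str, str], ...] = (
    (
        "What is 10 minus 4 divided by 2?",
        "Division comes before subtraction, so first 4 / 2 = 2. "
        "Then 10 - 2 = 8. The answer is 8.",
    ),
)


def few_shot_cot(problem: str, demos: tuple[tuple[str, str], ...] = DEMONSTRATIONS) -> str:
    """Show step-by-step solved examples, then leave the real problem's answer open."""
    parts = [f"Q: {question}\nA: {answer}" for question, answer in demos]
    parts.append(f"Q: {problem}\nA:")
    return "\n\n".join(parts)


prompt = few_shot_cot("What is 24 divided by 6 plus 3 times 2?")
print(prompt)
```

The demonstrations are a tuple so the default argument cannot be mutated by accident between calls.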

Other Tips and Techniques

Use natural language

Be concise

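Be specific. For example, change "write me a good cover letter" to "write me a cover letter that meets these specific points: 1… 2… 3…"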

Avoid jargon and technical terms. If you can't avoid them, provide a brief definition.

Allow the LLM to give feedback

Letting the LLM (1) ask clarifying questions or (2) point out gaps in your prompt before it proceeds helps you improve the prompt, and with it the result.

Ask for 3 options or variations to choose from. This is like giving the LLM several chances to get it right.
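If you call an LLM from code, asking for a numbered list makes the variations easy to separate afterwards. Here is a minimal sketch. Both helpers are hypothetical, the reply is made up, and the parser assumes each variation fits on a single line.

```python
import re


def ask_for_variations(task: str, n: int = 3) -> str:
    """Append a request for n alternatives, formatted as a numbered list."""
    return f"{task}\n\nGive me {n} different variations as a numbered list, one per line."


def parse_variations(reply: str) -> list[str]:
    """Pull the items out of lines like '1. ...', ignoring any text around the list."""
    return re.findall(r"^[ \t]*\d+\.[ \t]+(.+)$", reply, flags=re.MULTILINE)


prompt = ask_for_variations("Suggest a title for my blog post about prompt engineering.")

# A made-up reply, used only to show the parsing step.
reply = "Here are three options:\n1. Prompting 101\n2. Talk to Your LLM\n3. The Prompt Playbook"
print(parse_variations(reply))
```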

Rephrase your task if the LLM keeps getting it wrong.

When using ChatGPT, Gemini, or similar tools, you can go back and edit your previous prompts.

Conclusion

In summary, crafting effective prompts for LLMs is both an art and a science. By understanding the importance of a good prompt, adopting the right mindset, and applying essential and advanced techniques, you can significantly enhance your interactions with AI models like ChatGPT and Gemini.

Key Takeaways:

Iterative Process: Don't aim for perfection in one shot. Refine your prompts over time.

Clear Structure: Use a structured approach to writing prompts, including task, context, and output format.

Role-Playing: Assign specific roles to the LLM to improve relevance and accuracy.

Examples and Templates: Provide examples and use templates to guide the LLM's responses.

Chain of Thought: Ask the LLM to reason step by step on problems that need logic.

Other Tips: Use natural language, be concise, be specific, avoid jargon, allow the LLM to give feedback, rephrase tasks that keep failing, and ask for variations to improve outcomes.

By applying these techniques and best practices, you'll be well on your way to mastering prompt engineering. Remember, the key is to experiment, iterate, and continuously improve. Happy prompting!

P.S. This blog was written with help from GPT-4o. I drafted the structure of the blog and asked GPT-4o to critique it, suggest improvements, and fill in details. Here is my prompt:

You are an award-winning blog writer, and you will help me write my blog. This blog is about best practice and guide in prompt engineering.

The final blog should target the general audience. Therefore, keep the language easy, concise, and friendly. If any, technical words should be used but must be accompanied by short explanation.

I have started the structure of my blog, provided some talking points, and left places to fill more details in.

<blog>

<section 1> <untitled>

- why a good prompt is critical to work with LLM?

- Quote from gurus:

- Andrew Ng said that we should treat LLM as a fresh graduate to help us with tasks. So we need to tell them clearly what they need to do and even break down how to do it (i.e. providing guidelines). He also mentioned that many well-performing LLM systems have a long system prompt.

- Google found that a good prompt has an average of 21 words in it. (But I suspect that this number excludes things like examples for the LLM to understand the task)

- In this blog, best practice for prompting can be applied to both (1) using ready tools like ChatGPT or Gemini and (2) building custom LLM-based apps.

<section 2> Mindset in writing prompts

- Don’t aim to be perfect in one shot: you need to iterate and improve.

- Playground mindset: start quick and broad, then narrow down until you are satisfied.

- LLM AI is not god and it cannot read your mind.

- You know your mind best, start there and tell LLM.

<section 3> Prompting techniques in Levels

<section 3.1> Level 1-Main Components

- Main components are

- Task, e.g. write a cover letter

- Context, e.g. I am applying to a senior AI engineer at OpenAI. Here is the posted job description. <…>

- Output format: a one-page cover letter, conventional and formal style.

<section 3.2> AI character (role-playing)

- Adding a role makes LLM perform better <why and how???>

- Don’t be generic, like “Act as a helpful AI assistant…”

- Be specific, like “Act as senior HR consult working in a world-class head hunter company…”

<section 3.3> Formating prompt

- Organize sections in the prompt, like role section, task section, context section, example section.

- Use XML tags <give examples>

- Templates make LLM understand better. “Q: <example question> A: <example answer> Q: <real question> A:”

<section 3.4> Providing examples (a.k.a few-shot learning or N-shot learning)

- <explain what this is>

- <give example>

<section 3.5> Chain of Thought prompting

- This helps LLM think sequentially and apply logic through steps it takes rather than forcing the final right out.

- This is akin to ‘multi-step prompt’ where you explicitly break down a complex task into many simpler tasks. This helps LLM handle the complex task better.

- <give example of chain-of-thought prompting>

<section 4> Other techniques and tips

1. Allow LLM to ask clarifying questions or reflect on your instructions before proceeding.

1. This likely helps you identify gaps in your previous prompt so that you can improve it.

2. If the LLM still fails, you could try to phrase your task differently.

3. Ask for 3 variations of things, so you can pick the best one. This is like giving LLM chances to think multiple times.

4. When using ChatGPT or Gemini and similar tools, you can go back to edit your previous prompts!

<section 5> Universally Applied Things

- Use natural language

- Be concise

- Be specific

- Change ‘write me a good cover letter’ to ‘write me a cover letter which meet these specific points: 1… 2… 3…’

- Avoid jargons or technical terms, or you should provide their definitions.

</blog>

Here is the list of subtasks you should do

1. Analyze the blog draft, and criticize how to improve the presentation (i.e. flow of presenting the ideas)

2. You may suggest new sections, rearrangement, or etc, about the blog structure.

3. For each section, identify key points and actions (such as filling more info, providing examples) for each section.

4. Suggest 3 alternative titles for each section.

5. Start writing each section from bottom to top

You are going to work with me through these steps, one step at a time.

References

Google’s Prompting Guide 101

Meta’s Prompting Guide

OpenAI’s Prompting Guide

Anthropic’s Prompting Guide