gpt-oss Models Will Boost Agentic AI Development

OpenAI re-enters the open-source community with `gpt-oss`, a model family built for agentic applications.

I believe the release of the gpt-oss models is a step forward for anyone building agentic AI applications. Part of the benefit is the ability to host the models locally (for example, with Ollama). Unlike closed-source, API-only models, these can run directly on your own infrastructure, which gives you full control over the model.

Highlights

Built for Agentic Applications: `gpt-oss` was designed from the start for agentic workflows such as tool use and multi-step reasoning.

Ensure Data Privacy and Security: For businesses in regulated industries like healthcare or finance, sensitive data can be processed on-premises without ever leaving the company's network, which is crucial for compliance.

Enable Offline and Edge-Device Applications: The smaller `gpt-oss-20b` model, which needs only 16 GB of memory, is well suited to on-device use cases, making it possible to build agents that operate offline.

Customization: The Apache 2.0 license also permits extensive fine-tuning and customization, so you can build specialized agents tailored to specific tasks or domains.

Summary of gpt-oss Models

OpenAI has released two new open-weight language models, gpt-oss-120b and gpt-oss-20b, under the flexible Apache 2.0 license.

Performance:

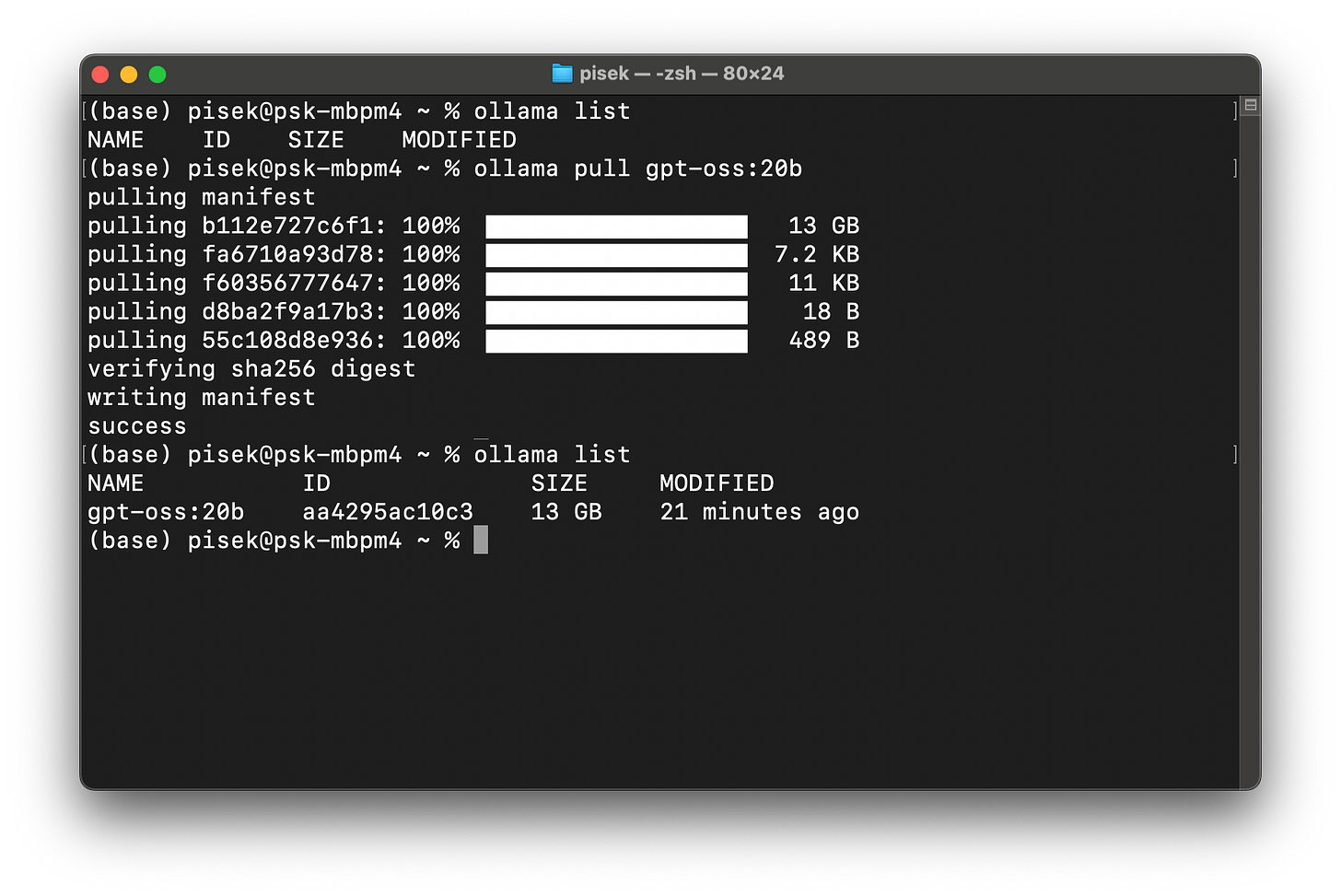

`gpt-oss-120b` performs similarly to the proprietary `o4-mini` model, while `gpt-oss-20b` is comparable to `o3-mini`. Both excel at reasoning, few-shot function calling, and tool use.

Hardware Efficiency: The models are designed for efficient deployment. The larger model can run on a single 80 GB GPU, while the smaller one needs just 16 GB of memory. (I was able to run the 20b version on my MacBook Pro M4 with Ollama.)
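To see why those memory budgets are plausible, here is a rough, hypothetical back-of-the-envelope estimate. It makes two assumptions. First, every weight is stored in MXFP4, where each block of 32 four-bit values shares one 8-bit scale, giving 4.25 bits per weight. Second, the parameter counts are taken from the model names at face value. Real checkpoints may keep some tensors in higher precision, and inference also needs memory for activations and the KV cache. Treat the result as a lower bound on weight memory, not a measurement.

```python
# Back-of-the-envelope weight-memory estimate for MXFP4-quantized weights.
# Assumptions (not measurements): ALL weights are MXFP4, and parameter counts
# come from the model names (20e9, 120e9). Activations and KV cache are ignored.

BITS_PER_ELEMENT = 4  # each FP4 element
SCALE_BITS = 8        # one shared 8-bit scale per block
BLOCK_SIZE = 32       # elements per MX block


def mxfp4_bits_per_weight() -> float:
    """Effective cost of one MXFP4 weight, including its share of the block scale."""
    return BITS_PER_ELEMENT + SCALE_BITS / BLOCK_SIZE


def weight_memory_gb(num_params: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return num_params * mxfp4_bits_per_weight() / 8 / 1e9


for name, params, budget_gb in [("gpt-oss-20b", 20e9, 16), ("gpt-oss-120b", 120e9, 80)]:
    need = weight_memory_gb(params)
    print(f"{name}: ~{need:.1f} GB of weights vs. a {budget_gb} GB budget")
```

At 16 bits per weight, the same 20e9 parameters would need about 40 GB, which is why the 4-bit format matters for fitting on a laptop.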

Architecture: They are Transformer models that use a Mixture-of-Experts (MoE) architecture for efficiency, which allows them to activate only a subset of their total parameters for each token.
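A Mixture-of-Experts layer swaps one large feed-forward block for several smaller "expert" networks plus a router that picks a few of them per token. The sketch below is a generic, toy top-k softmax router in plain Python, not gpt-oss's actual implementation. The expert count, the choice of `k=2`, the linear router, and the toy experts are all illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: Vector, router_weights: List[Vector],
                experts: List[Callable[[Vector], Vector]], k: int = 2) -> Vector:
    """Route one token to its top-k experts and mix their outputs.

    Only the k selected experts are evaluated; skipping the rest is
    where MoE saves compute.
    """
    # Router score per expert: dot product of the token with that expert's router row.
    scores = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # Indices of the k highest-scoring experts.
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalize gate weights over the selected experts only.
    gates = softmax([scores[i] for i in top])
    out = [0.0] * len(token)
    for gate, i in zip(gates, top):
        y = experts[i](token)  # only selected experts run
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Toy demo (hypothetical numbers): 4 experts that scale their input,
# and a router that scores experts 2 and 0 highest for this token.
calls: List[int] = []


def make_expert(idx: int, scale: float) -> Callable[[Vector], Vector]:
    def expert(x: Vector) -> Vector:
        calls.append(idx)  # record which experts actually ran
        return [scale * v for v in x]
    return expert


experts = [make_expert(i, float(i + 1)) for i in range(4)]
router = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
output = moe_forward([1.0, 0.0], router, experts, k=2)
```

Real MoE layers do this with batched tensor operations for every token in every MoE layer. The point here is only that unselected experts never execute, so the parameters active per token are far fewer than the total.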

Functionality: They are built for agentic workflows, with strong instruction following, tool use (such as web search and Python code execution), and adjustable Chain-of-Thought (CoT) reasoning. They support structured outputs and offer a context length of up to 128k tokens.
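In practice, tool use means the model returns a structured request to call a function, and your code runs it and feeds the result back. The following sketch shows the dispatch half of that loop. It assumes the message shape Ollama's `/api/chat` endpoint returns when you pass a `tools` list: a `tool_calls` list whose entries carry `function.name` and `function.arguments`. `get_weather` is a hypothetical tool that returns a canned string.

```python
import json
from typing import Any, Callable, Dict, List


def get_weather(city: str) -> str:
    """Hypothetical tool; a real agent would call a weather API here."""
    return f"Sunny in {city}"


# Registry mapping tool names to Python callables.
TOOLS: Dict[str, Callable[..., str]] = {"get_weather": get_weather}

# JSON-schema tool description, sent to the model in the request's "tools" field.
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]


def run_tool_calls(message: Dict[str, Any]) -> List[Dict[str, str]]:
    """Execute each tool call in an assistant message and return 'tool' messages.

    Assumed shape: {"tool_calls": [{"function": {"name": ..., "arguments": ...}}]}.
    Arguments may be a dict or a JSON string depending on the endpoint.
    """
    results = []
    for call in message.get("tool_calls", []):
        fn = call["function"]
        args = fn.get("arguments") or {}
        if isinstance(args, str):
            args = json.loads(args)
        tool = TOOLS.get(fn["name"])
        output = tool(**args) if tool else f"Unknown tool: {fn['name']}"
        results.append({"role": "tool", "content": output})
    return results
```

In a full agent loop, you would append the assistant message and these `tool` messages to the conversation. You would then call the model again until it answers without requesting a tool.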

Availability: The models are available on Hugging Face. They come pre-quantized in MXFP4, which helps them fit within the memory requirements above. One crucial note for developers: do not display the model's CoT directly to end users, as it may contain unverified or harmful content.
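In application code, that advice comes down to keeping the reasoning and the final answer in separate places. The sketch below assumes the runtime returns the chain of thought in its own field of the assistant message. Ollama's chat API uses `thinking` when thinking output is enabled; the other key names checked here are assumptions about other runtimes.

```python
from typing import Any, Dict, Optional, Tuple

# Keys that may carry the model's chain of thought. Only "thinking" (Ollama)
# is assumed from a known runtime; the others are guesses for other servers.
REASONING_KEYS = ("thinking", "reasoning", "reasoning_content")


def split_reasoning(message: Dict[str, Any]) -> Tuple[str, Optional[str]]:
    """Return (user_facing_text, reasoning_or_None) from an assistant message.

    Show only the first element to end users; send the reasoning to logs or
    an internal trace for debugging and evaluation.
    """
    answer = message.get("content") or ""
    reasoning = next((message[k] for k in REASONING_KEYS if message.get(k)), None)
    return answer, reasoning
```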

Run it with Ollama

I tried running the `gpt-oss:20b` version on my MacBook Pro M4 (late 2024, with 24 GB of memory), and it worked!
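Once Ollama is installed, `ollama pull gpt-oss:20b` downloads the model and `ollama run gpt-oss:20b` opens an interactive chat. To call the model from an agent instead, you can use Ollama's local REST API. The sketch below uses only the Python standard library and assumes the server is running on its default address (`http://localhost:11434`). The helper names `build_chat_request` and `chat` are my own, not part of Ollama.

```python
import json
import urllib.request
from typing import Any, Dict

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint


def build_chat_request(prompt: str, model: str = "gpt-oss:20b") -> urllib.request.Request:
    """Build a non-streaming /api/chat request for a locally running Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON response instead of a stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, model: str = "gpt-oss:20b", timeout: float = 300.0) -> str:
    """Send the prompt and return the assistant's reply text (needs the Ollama server running)."""
    with urllib.request.urlopen(build_chat_request(prompt, model), timeout=timeout) as resp:
        body: Dict[str, Any] = json.load(resp)
    return body["message"]["content"]
```

With the server running, `print(chat("Summarize the Apache 2.0 license in one sentence."))` should print the model's reply. Setting `"stream": False` keeps the example simple. For interactive agents you may prefer streaming, where Ollama returns one JSON object per line.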