GPT-4o: 7 Highlights from OpenAI Demo

Top Things You Need to Know in May 2024 + Expanded Commentary.

On May 13, 2024, OpenAI introduced GPT-4o to the world, where the 'o' stands for 'omni'. The YouTube livestream of the demo received 2.9 million views within a day. That is phenomenal, both as a measure of the world's excitement and of the capabilities GPT-4o demonstrated.

1. GPT-4o will be available to 'everyone'.

Yes, everyone can use it for free! Paid subscribers get extra perks, such as higher usage limits and priority access.

2. The desktop app is here! (Plus an improved web UI)

Well, for macOS users at least, the native ChatGPT app is here!

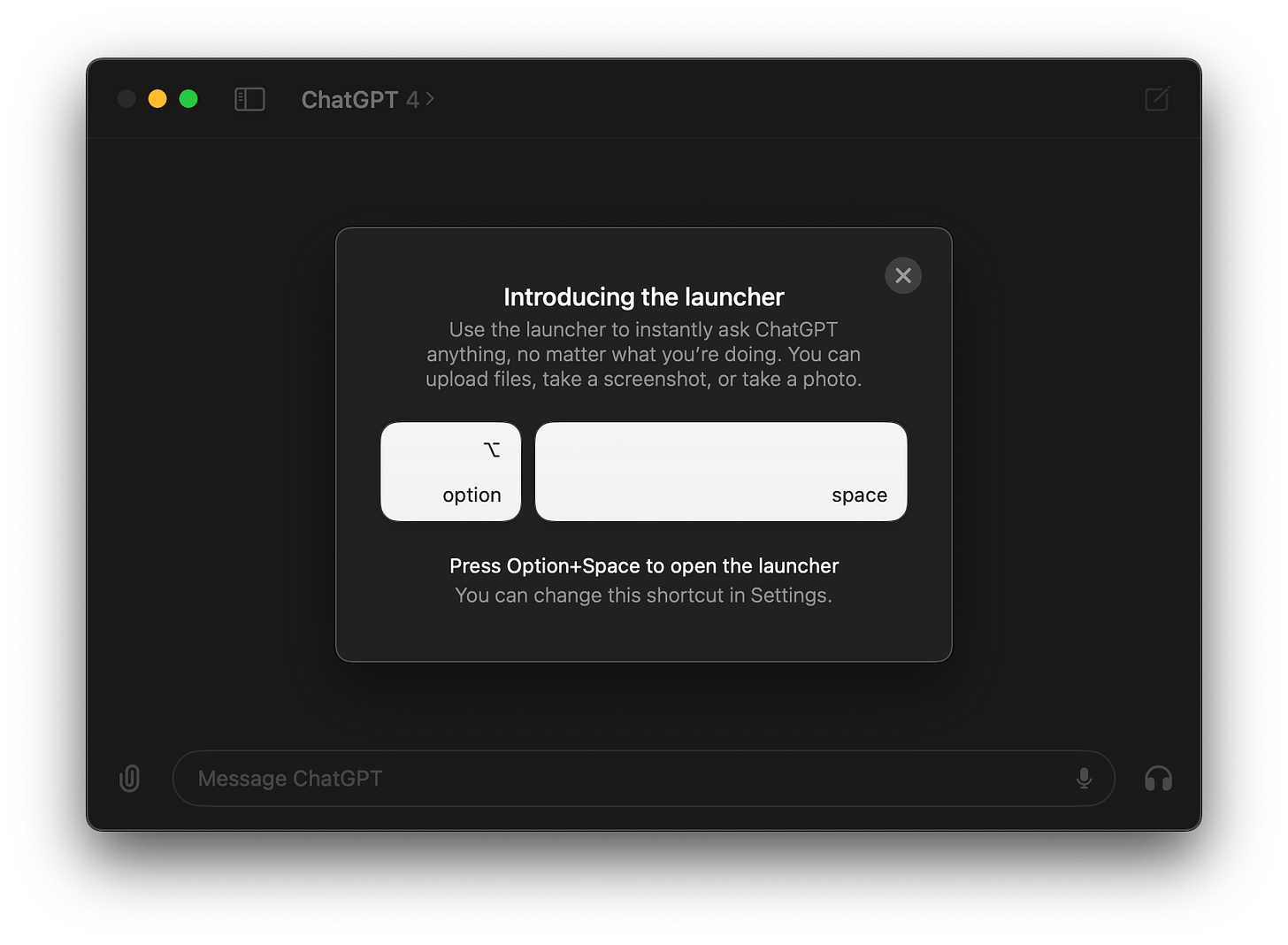

The 'launcher' gives you a quick way to send ChatGPT a message and start a chat. The default shortcut is Option+Space.

You can interact with the desktop app in the following ways:

- Type text as usual
- Upload files
- Take screenshots
- Take photos
- Chat with voice
- Copy and paste text into it while in voice mode

👀 watch that moment here.

3. GPT-4-level Intelligence, but Faster!

The OpenAI team claims that GPT-4o performs at least on par with its predecessor, GPT-4, in terms of 'intelligence'.

They go further, claiming that GPT-4o actually improves on GPT-4 in math and coding, as well as across 50 non-English languages.

👀 watch that moment here.

4. Multimodal input that feels much more natural to human users

GPT-4o can take in text, voice, images, and a video feed at the same time, so it perceives the world more like a human would. Its speed upgrade also reduces the lag between the user's action and the model's response. The result is an AI that can interact with humans in a way that feels familiar to them.

👀 watch that moment here,

or here, when a guy asks GPT-4o to solve a math problem written on a piece of paper.

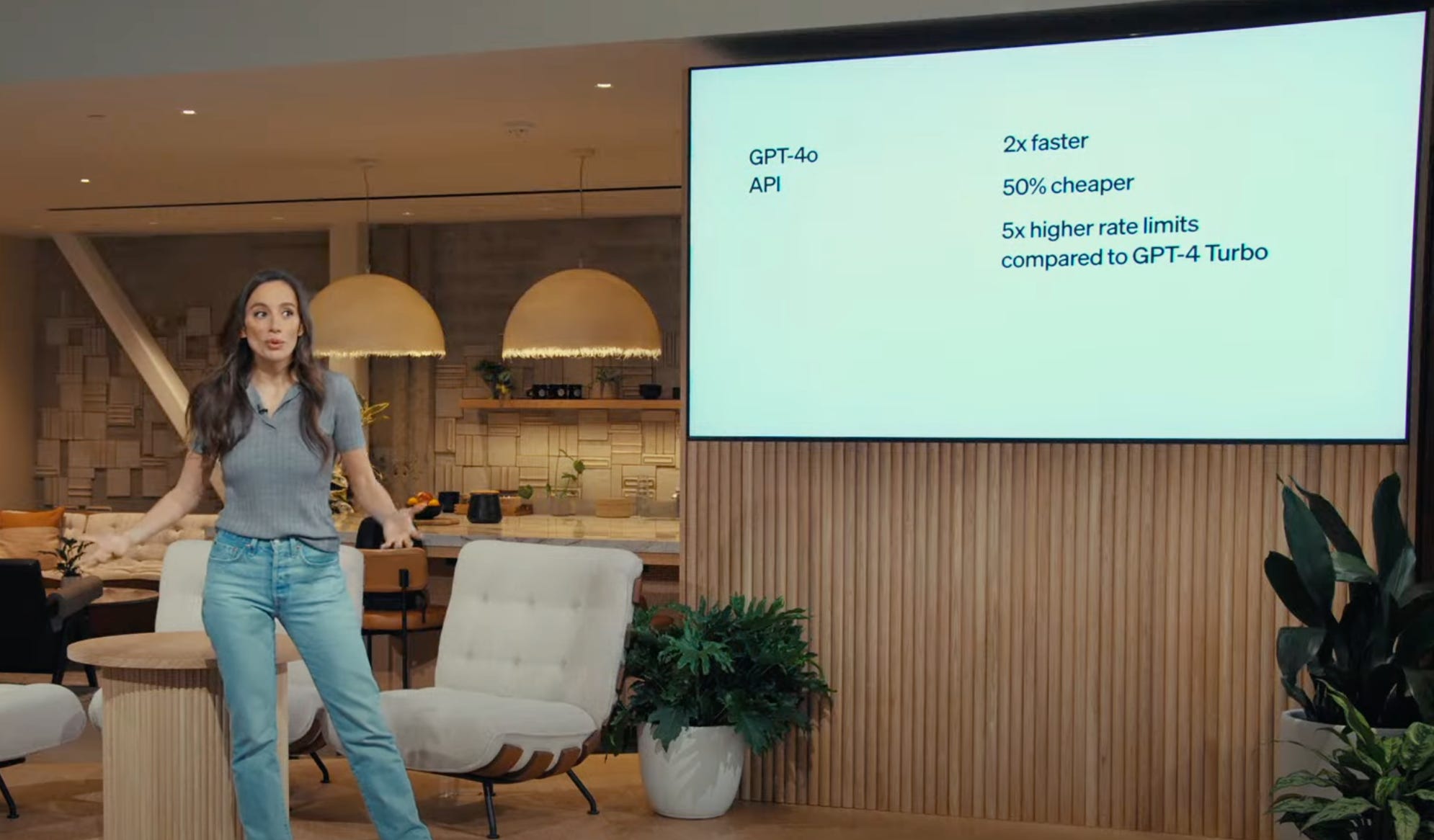

5. Christmas comes early for API users

Compared with GPT-4 Turbo, the GPT-4o API is:

- 2x faster
- 50% cheaper
- 5x higher in rate limits

👀 watch that moment here.
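To make those multipliers concrete, here is a minimal sketch that applies them to a baseline you supply yourself. It assumes the comparison point is GPT-4 Turbo, reads "2x faster" as "half the latency" (a simplification), and uses a hypothetical `ApiProfile` type and `project_gpt4o` helper invented for illustration; the baseline numbers are made up, not real OpenAI prices or limits.

```python
# A minimal sketch, not an official calculator: apply the relative figures
# quoted in the demo ("2x faster", "50% cheaper", "5x higher rate limit")
# to a hypothetical GPT-4 Turbo baseline that you supply yourself.
from dataclasses import dataclass

SPEEDUP = 2.0            # "2x faster", read here as half the latency (assumption)
PRICE_FACTOR = 0.5       # "50% cheaper"
RATE_LIMIT_FACTOR = 5.0  # "5x higher rate limit"


@dataclass
class ApiProfile:
    """Hypothetical summary of an API workload (illustrative only)."""
    monthly_cost_usd: float     # spend for a fixed workload
    latency_s: float            # time per response
    requests_per_minute: float  # rate limit


def project_gpt4o(baseline: ApiProfile) -> ApiProfile:
    """Scale a baseline profile by the multipliers quoted in the demo."""
    return ApiProfile(
        monthly_cost_usd=baseline.monthly_cost_usd * PRICE_FACTOR,
        latency_s=baseline.latency_s / SPEEDUP,
        requests_per_minute=baseline.requests_per_minute * RATE_LIMIT_FACTOR,
    )


if __name__ == "__main__":
    # Hypothetical baseline numbers, chosen only to illustrate the arithmetic.
    before = ApiProfile(monthly_cost_usd=100.0, latency_s=4.0, requests_per_minute=500.0)
    after = project_gpt4o(before)
    print(after)
    # ApiProfile(monthly_cost_usd=50.0, latency_s=2.0, requests_per_minute=2500.0)
```

In practice, actual cost depends on per-token pricing for your input and output volume, latency depends on prompt and response length, and rate limits vary by account tier, so treat this as back-of-the-envelope arithmetic only.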

6. Emotion-enabled AI Chatter!

GPT-4o can guess the emotional state of the user who is speaking. Even more impressive, the way it responded to the user in the demo was awesome!

👀 watch that moment here,

or here, when GPT-4o tried to calm down Mark, who was panting hard,

or here, when GPT-4o told a bedtime story in different tones of voice,

or here again, when a guy asked GPT-4o to guess his emotions from his facial expression.

7. You can interrupt it while it's speaking

With a reported response time of around 300 ms, GPT-4o can be interrupted while it is responding, much as one person interrupts another. This is great because you no longer have to wait for it to finish.

👀 watch that moment here.

It was a GREAT demo, shining in both technical advancement and showmanship!

To me, GPT-4o may be the most advanced publicly available generative AI as of May 13, 2024. Is it worth the $20 monthly subscription? Yes, I think so.

Because the model is more advanced in both intelligence and human interaction, it opens more doors to adoption and new applications. I’m excited to see how people will now use GPT-4o!