Google’s Gemini 2.0: Pushing the Boundaries of AI with Multimodal Power and Smarter Agents

A quick summary of Google's December 2024 announcement of Gemini 2.0.

Google DeepMind just unveiled an exciting leap forward in AI: Gemini 2.0, a new generation of models designed to take intelligence, reasoning, and interaction to the next level. As someone who’s followed AI advancements closely, I find this announcement particularly thrilling. From handling multimodal inputs and outputs to enabling agents that can genuinely assist with everyday tasks, Gemini 2.0 promises to redefine how we work with AI. Here’s a breakdown of what you need to know, along with why I think it’s a big deal.

What is Gemini 2.0?

Gemini 2.0 builds on Google’s vision for AI that doesn’t just understand commands but can actively help you achieve your goals. Personally, I think this is where AI starts to feel less like a tool and more like a true collaborator. The first release in the Gemini 2.0 family, Gemini 2.0 Flash, is now available as an experimental model. It’s accessible to developers through the Gemini API in Google AI Studio and Vertex AI. General availability is coming in January, with more model sizes on the way.

Here’s what makes it stand out:

Multimodal Inputs and Outputs: Gemini 2.0 Flash can work with images, video, and audio inputs, and produce text, generated images, and even steerable text-to-speech audio.

Tool Integration: It can natively call tools such as Google Search, execute code, and use third-party, user-defined functions.

Advanced Personalization: In prototypes built on Gemini 2.0, such as Project Astra, improved memory lets the assistant recall past conversations for better context and personalization.
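To make the tool-integration point concrete, here is a minimal sketch of how a developer might enable tools when calling Gemini 2.0 Flash through the Gemini API's REST interface, using only the Python standard library. Several details are assumptions on my part rather than anything stated in the announcement: the model ID `gemini-2.0-flash-exp`, the `v1beta` `generateContent` endpoint, the tool field names `google_search`, `code_execution`, and `function_declarations`, the hypothetical `get_weather` function, and the hypothetical `GEMINI_API_KEY` environment variable. Check the current Gemini API documentation before relying on any of them.

```python
import json
import os
import urllib.request

# Assumption: model ID of the experimental December 2024 release.
MODEL = "gemini-2.0-flash-exp"
# Assumption: public Gemini API REST endpoint (v1beta) for generateContent.
ENDPOINT = (
    "https://generativelanguage.googleapis.com/v1beta/models/"
    f"{MODEL}:generateContent"
)


def build_request_body(prompt, use_search=False, use_code_execution=False,
                       functions=None):
    """Assemble a generateContent JSON body that enables the requested tools.

    The tool field names are assumptions based on the REST docs; whether
    several tools may be combined in one request depends on the model and
    API version.
    """
    tools = []
    if use_search:
        tools.append({"google_search": {}})       # grounding with Google Search
    if use_code_execution:
        tools.append({"code_execution": {}})      # let the model run code
    if functions:
        # User-defined functions described with an OpenAPI-style schema.
        tools.append({"function_declarations": functions})
    body = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    if tools:
        body["tools"] = tools
    return body


def send(body, api_key):
    """POST the body to the Gemini API and return the parsed JSON response."""
    req = urllib.request.Request(
        f"{ENDPOINT}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Hypothetical user-defined function the model could ask us to call.
    get_weather = {
        "name": "get_weather",
        "description": "Return the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
    body = build_request_body("Should I bring an umbrella in Paris today?",
                              functions=[get_weather])
    print(json.dumps(body, indent=2))
    key = os.environ.get("GEMINI_API_KEY")  # hypothetical env var name
    if key:  # only hit the network when a key is configured
        print(send(body, key))
```

Keeping request construction separate from the network call makes it easy to inspect exactly which tools a request enables before any API key is involved. When the model decides to use a user-defined function, your code is responsible for running it and sending the result back in a follow-up request.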

Projects Powered by Gemini 2.0

Gemini 2.0 isn’t just a model; it’s a platform where Google DeepMind experiments with innovative AI capabilities. These projects aim to explore and improve AI for more complex, natural use cases. Here are some of the big initiatives it’s driving:

1. Project Astra: Agents for Everyday Life

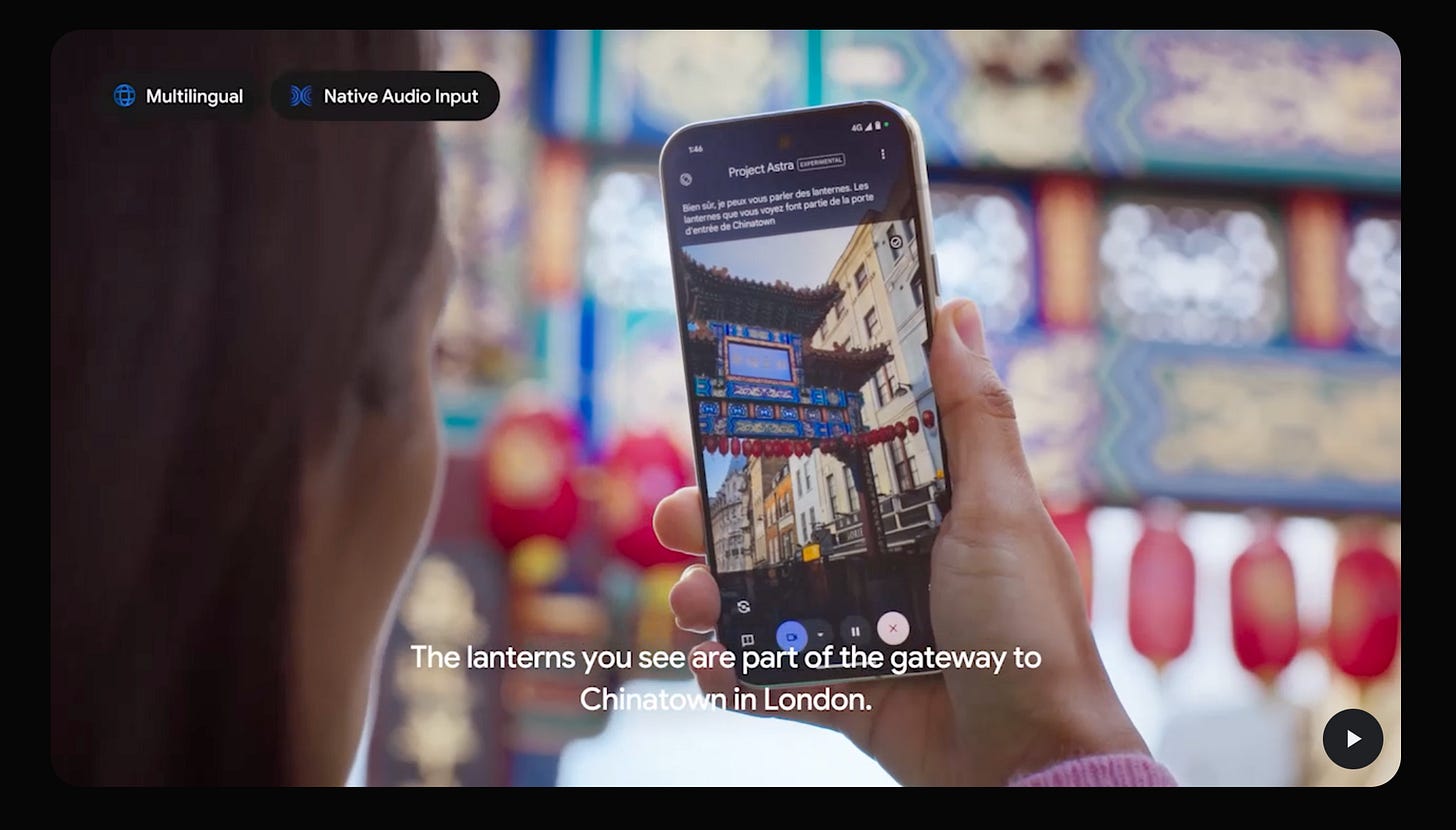

Project Astra is one of the most ambitious initiatives powered by Gemini 2.0. Designed to enhance real-world interactions, Astra takes a holistic approach to integrating AI into daily life. Its standout features include:

Multimodal Understanding: Astra combines continuous streams of audio and visual input to comprehend real-world environments seamlessly. For instance, it can identify objects through a smartphone camera and provide context-based information.

Multilingual Dialogue: Astra can converse in multiple languages, even mixing them within a single interaction, and it better understands accents and uncommon words, making communication smoother.

Prototype Glasses Integration: DeepMind is testing Astra on prototype glasses, where the assistant sees the world as you do, offering real-time insights and assistance.

Smarter Tools: With access to Google Search, Lens, and Maps, Astra’s agents are better equipped to assist in daily life.

Improved Memory: Astra agents can remember conversations from your current session and previous ones, personalizing their responses to you.

Faster Responses: Thanks to low-latency streaming, the agents feel as responsive as talking to another person.

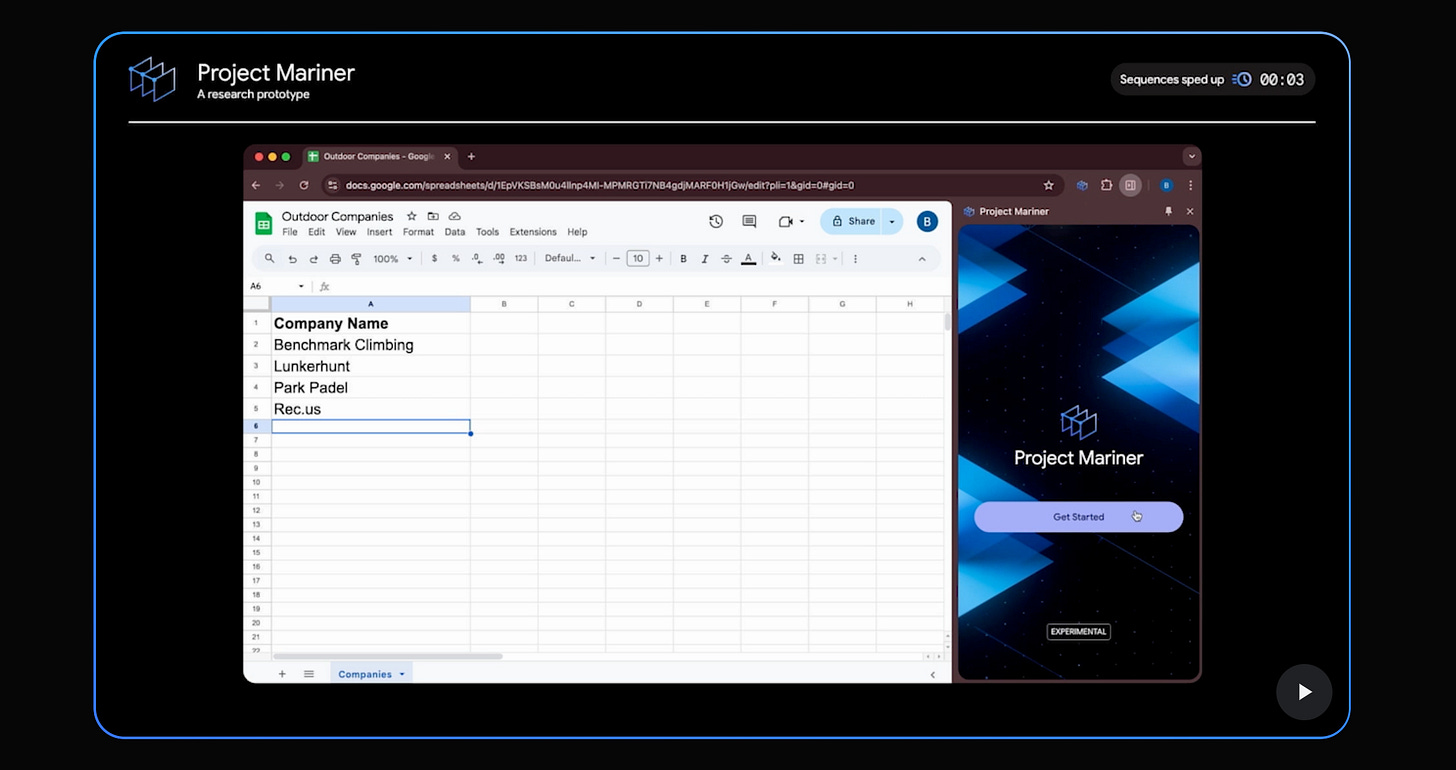

2. Project Mariner: Advanced AI for Web Navigation

Ever wished for an agent that could navigate your browser like a pro? I know I have, and Project Mariner might be the answer. It’s a research prototype that uses Gemini 2.0’s reasoning to understand what’s on your browser screen, including pixels and web elements such as text, code, images, and forms, and then completes tasks for you through an experimental Chrome extension. Working as a single-agent setup, Mariner achieved a state-of-the-art score of 83.5% on the WebVoyager benchmark, which evaluates agents on end-to-end, real-world web tasks, hinting at its potential to transform web-based workflows.

3. Deep Research: Your Personal AI Think Tank

Deep Research, a new agentic feature in Gemini Advanced announced alongside Gemini 2.0, uses advanced reasoning and long-context capabilities to act as a tireless research assistant for exploring complex topics. Whether you’re compiling reports or reasoning through intricate problems, it’s designed to save you hours of work. I’m especially impressed by how smoothly it handles such complex tasks.

Key Technical Advancements in Summary

Multimodal Power: Gemini 2.0 doesn’t just process text; it understands images, video, and audio, and can generate images mixed with text. It even offers multilingual, steerable text-to-speech.

Tool Use: It can natively call tools like Google Search, execute code, and invoke user-defined functions, a huge leap for productivity.

Memory and Personalization: In Project Astra, up to 10 minutes of in-session memory plus recall of previous conversations make the assistant smarter and more personalized.

Human-Like Speed: Its low-latency response times make interactions feel natural and seamless.

What Does This Mean for You?

If you’re a developer, researcher, or simply an AI enthusiast, Gemini 2.0 opens up a world of possibilities. Imagine:

An assistant that can plan your day, answer complex queries, and help with tasks across languages.

Tools that reason like a human while harnessing vast computational power.

Smarter agents that make browsing, searching, and even creative projects faster and easier.

Starting today, you can explore Gemini 2.0 Flash’s experimental capabilities through Google AI Studio and Vertex AI. I’d encourage you to give it a try and see the potential for yourself—I know I’m eager to dive in. As the general release rolls out in January, expect even more model sizes and expanded access.

A Friendly Step Toward the Future

Google’s Gemini 2.0 is more than a technology milestone; it’s a step toward a future where AI feels genuinely collaborative and helpful. By enabling smarter agents, more natural conversations, and deeper reasoning, Google is setting the stage for AI that can truly partner with you.

I’m excited to see how this unfolds. Ready to dive in? The future of AI starts now with Gemini 2.0 Flash.

Sources: